What is cache memory? For people who use the internet every day, the term cache is no longer unfamiliar. For example, when a website starts loading slowly, many people recommend clearing the cache as a first fix.

Even though we encounter it every day, many of us know the term without really understanding it, as with many other things around us. I have written about cache before, but I was not satisfied because some topics were left out.

Therefore, in this article I will revisit what cache memory is, including how it works. Stay tuned for the review below!

What is Cache Memory?

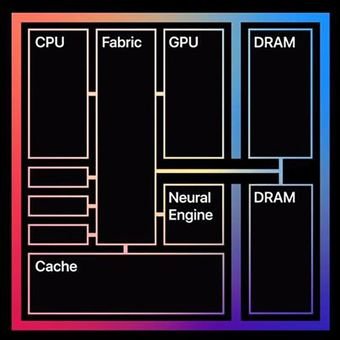

Cache memory, also called CPU memory, is high-speed static random access memory (SRAM) that a computer’s microprocessor can access faster than it can access regular random access memory (RAM). This memory is usually integrated directly into the CPU chip or placed on a separate chip that has its own bus interconnect with the CPU.

The purpose of cache memory is to store instructions and program data that are used repeatedly in program operations or information that the CPU may need next. The computer processor can access this information quickly from the cache rather than having to get it from the main memory of the computer. Quick access to these instructions increases the overall speed of the program.

When the processor needs data, it looks in the cache memory first. If it finds the instruction or data it is looking for there from a previous read, it does not have to perform a more time-consuming read from the larger main memory or another data storage device. This is how cache memory speeds up computer operations and processing.
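To make the hit/miss idea concrete, here is a minimal Python sketch, assuming a small fully associative cache with least-recently-used (LRU) replacement that sits in front of a dictionary standing in for main memory. Real CPU caches are built in hardware, work on fixed-size cache lines and are usually set-associative, so the `SimpleCache` class and its `read` method are hypothetical illustrations, not how any processor is actually implemented.

```python
from collections import OrderedDict


class SimpleCache:
    """A tiny fully associative cache with LRU replacement (illustrative only)."""

    def __init__(self, capacity, backing_store):
        self.capacity = capacity          # number of entries the cache can hold
        self.backing = backing_store      # stands in for slower main memory
        self.entries = OrderedDict()      # address -> value, least recently used first
        self.hits = 0
        self.misses = 0

    def read(self, address):
        if address in self.entries:
            # Cache hit: serve from the fast store and mark as most recently used.
            self.hits += 1
            self.entries.move_to_end(address)
            return self.entries[address]
        # Cache miss: fetch from the slower backing store and keep a copy.
        self.misses += 1
        value = self.backing[address]
        self.entries[address] = value
        if len(self.entries) > self.capacity:
            # Evict the least recently used entry to make room.
            self.entries.popitem(last=False)
        return value


main_memory = {addr: addr * 10 for addr in range(100)}
cache = SimpleCache(capacity=4, backing_store=main_memory)
for addr in [1, 2, 3, 1, 2, 3, 1, 2, 3]:
    cache.read(addr)
print(cache.hits, cache.misses)  # 6 3
```

Only the first read of each address goes to "main memory"; every repeat is served from the cache, which is exactly the saving described above.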

Implementation

Now that we know what cache memory is, let’s look at its history. Mainframes used an early version of cache memory, but the technology as it is known today began to develop with the advent of microcomputers. In early PCs, processor performance increased much faster than memory performance, so memory became a bottleneck that slowed the system down.

In the 1980s, the idea emerged that a small amount of more expensive, faster RAM could be used to improve the performance of cheaper, slower main memory. Initially, the cache was separate from the system processor and was not always included in the chipset. Early PCs typically had between 16 KB and 128 KB of cache memory.

With the 486 processor, Intel added 8 KB of memory to the CPU as Level 1 (L1) cache. As much as 256 KB of external Level 2 (L2) cache was used in these systems. Pentium processors saw the external cache double again, to 512 KB at the high end. They also split the internal cache into two caches: one for instructions and the other for data.

Processors based on Intel’s P6 microarchitecture, introduced in 1995, were the first to incorporate L2 cache into the CPU package and run all of the system’s cache at the same clock speed as the processor. Before the P6, L2 cache external to the CPU was accessed at a much slower clock speed than the processor ran at, which slowed system performance significantly.

Early memory cache controllers used a write-through cache architecture, in which data written to the cache was also immediately updated in RAM. This approach minimized data loss but also slowed down operations. With later 486-based PCs, the write-back cache architecture was developed, in which RAM is not updated immediately. Instead, data is stored in the cache, and RAM is updated only at specific intervals or under certain circumstances, such as when modified data is about to be evicted from the cache.
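The trade-off between the two write policies can be sketched by counting how many times RAM is written. This is a simplified Python model under stated assumptions: the class names, the `dirty` set and the `flush` method are hypothetical, and `flush` stands in for whatever eviction or interval triggers a real write-back controller.

```python
class WriteThroughCache:
    """Every write updates both the cache and RAM immediately."""

    def __init__(self):
        self.cache = {}
        self.ram = {}
        self.ram_writes = 0

    def write(self, address, value):
        self.cache[address] = value
        self.ram[address] = value   # RAM is updated on every single write
        self.ram_writes += 1


class WriteBackCache:
    """Writes go to the cache only; modified (dirty) entries reach RAM later."""

    def __init__(self):
        self.cache = {}
        self.dirty = set()          # addresses modified since the last write-back
        self.ram = {}
        self.ram_writes = 0

    def write(self, address, value):
        self.cache[address] = value
        self.dirty.add(address)     # defer the RAM update

    def flush(self):
        # Stand-in for eviction or a periodic write-back: copy dirty entries to RAM.
        for address in sorted(self.dirty):
            self.ram[address] = self.cache[address]
            self.ram_writes += 1
        self.dirty.clear()


wt, wb = WriteThroughCache(), WriteBackCache()
for i in range(100):
    wt.write(0x10, i)   # the same address is written repeatedly
    wb.write(0x10, i)
stale_before_flush = 0x10 not in wb.ram   # RAM has not seen the writes yet
wb.flush()
print(wt.ram_writes, wb.ram_writes)  # 100 1
```

Write-back turns 100 RAM writes into one, but until the flush RAM holds stale data, which is why write-through was considered safer against data loss.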

Once they have been open and running for a while, most programs use few computing resources. That’s because frequently referenced instructions tend to be cached. This is why, in system performance measurements, a computer with a slower processor but a larger cache can outperform a computer with a faster processor but less cache space.

Multi-tier or multilevel caching has become popular in server and desktop architectures, with different levels providing greater efficiency through managed tiering. Simply put, the less frequently a particular piece of data or instruction is accessed, the lower the cache level it tends to be kept in (for example L2 or L3 rather than L1).
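One common way to see why tiering pays off is the textbook average memory access time (AMAT) formula, AMAT = hit time + miss rate × miss penalty, applied level by level. The Python sketch below uses made-up latencies and miss rates purely for illustration; they are assumptions, not measurements of any real processor.

```python
def amat(hit_time, miss_rate, miss_penalty):
    """Average memory access time = hit time + miss rate * miss penalty."""
    return hit_time + miss_rate * miss_penalty


# Hypothetical latencies (nanoseconds) and miss rates, for illustration only.
L1_HIT, L1_MISS_RATE = 1.0, 0.10
L2_HIT, L2_MISS_RATE = 10.0, 0.20   # local rate: share of L1 misses that also miss in L2
MEMORY = 100.0

# Without L2, every L1 miss goes straight to main memory.
one_level = amat(L1_HIT, L1_MISS_RATE, MEMORY)

# With L2, the L1 miss penalty becomes the average access time of L2.
two_level = amat(L1_HIT, L1_MISS_RATE, amat(L2_HIT, L2_MISS_RATE, MEMORY))

print(f"L1 only: {one_level:.1f} ns, L1 + L2: {two_level:.1f} ns")
```

With these assumed numbers the second level cuts the average access time from 11.0 ns to 4.0 ns, even though L2 is ten times slower than L1, because it absorbs most of the trips to main memory.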

What is Cache Memory Function?

Now that we know what cache memory is, it is time to look at what it does. Apart from instruction and data caches, other caches are designed to provide specialized system functions. According to some definitions, the shared design of the L3 cache makes it a specialized cache. Other definitions treat the instruction cache and the data cache as separate caches and refer to each of them as a specialized cache.

Translation lookaside buffers (TLBs) are also specialized memory caches; their function is to record translations from virtual addresses to physical addresses. There are still other caches. A disk cache, for example, can use RAM or flash memory to hold frequently used disk data, much as a memory cache holds CPU instructions. If data is accessed frequently from disk, it is cached in DRAM or silicon-based flash storage for faster access and response times.
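A TLB can be pictured as a small cache of page-table lookups. The Python sketch below is a simplified model under several assumptions: 4 KiB pages, a plain dictionary as the OS page table, and LRU replacement. The `TLB` class and the page-table contents are hypothetical; real TLBs are hardware structures and page tables are multi-level.

```python
from collections import OrderedDict

PAGE_SIZE = 4096  # assumed 4 KiB pages


class TLB:
    """A tiny LRU cache of virtual-page -> physical-frame translations."""

    def __init__(self, page_table, capacity=4):
        self.page_table = page_table    # full mapping kept by the OS (simplified)
        self.capacity = capacity
        self.entries = OrderedDict()    # virtual page -> physical frame
        self.hits = 0
        self.misses = 0

    def translate(self, virtual_address):
        # Split the address into a page number and an offset within the page.
        page, offset = divmod(virtual_address, PAGE_SIZE)
        if page in self.entries:
            self.hits += 1
            self.entries.move_to_end(page)
            frame = self.entries[page]
        else:
            # TLB miss: consult the slower page table, then cache the translation.
            self.misses += 1
            frame = self.page_table[page]
            self.entries[page] = frame
            if len(self.entries) > self.capacity:
                self.entries.popitem(last=False)
        return frame * PAGE_SIZE + offset


page_table = {0: 7, 1: 3, 2: 9}    # virtual page -> physical frame (made up)
tlb = TLB(page_table)
print(hex(tlb.translate(0x1234)))  # 0x3234 (page 1 -> frame 3, TLB miss)
print(hex(tlb.translate(0x1FFF)))  # 0x3fff (same page again, TLB hit)
print(tlb.hits, tlb.misses)        # 1 1
```

The offset within the page is unchanged; only the page number is translated, so one cached entry serves every address in that page.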

Dedicated caches are also available for applications such as web browsers, databases, network address binding and client-side Network File System protocol support. This type of cache may be distributed across multiple network hosts to provide greater scalability or performance for the applications that use it.

Locality

The ability of cache memory to improve computer performance depends on the concept of locality of reference. Locality describes various situations that make a system’s behavior more predictable, such as the same storage location being accessed repeatedly. These situations create the memory access patterns that cache memory relies on.

There are several types of locality. The two most important for caching are temporal and spatial locality. Temporal locality means the same resource is accessed repeatedly over a short period of time. Spatial locality means accessing data or resources that are located close to one another.
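Both kinds of locality can be observed with a toy simulation. The Python sketch below assumes a tiny direct-mapped cache with 8 lines of 64 bytes each; these sizes and the `hit_rate` helper are illustrative assumptions, not parameters of any particular CPU.

```python
LINE_SIZE = 64   # assumed bytes per cache line
NUM_LINES = 8    # assumed tiny direct-mapped cache with 8 lines


def hit_rate(addresses):
    """Simulate a direct-mapped cache and return the fraction of accesses that hit."""
    lines = [None] * NUM_LINES          # each slot holds the tag of the cached block
    hits = 0
    for addr in addresses:
        block = addr // LINE_SIZE       # memory block (line-sized chunk) holding this byte
        index = block % NUM_LINES       # cache slot that this block maps to
        tag = block // NUM_LINES        # distinguishes blocks sharing the same slot
        if lines[index] == tag:
            hits += 1
        else:
            lines[index] = tag          # miss: load the whole line into the slot
    return hits / len(addresses)


# Spatial locality: consecutive bytes reuse each line after it is loaded once.
sequential = list(range(1024))
# Poor locality: stepping a full line each time touches a new line on every access.
strided = [i * LINE_SIZE for i in range(1024)]
# Temporal locality: the same few addresses are reused over and over.
repeated = [0, 64, 128] * 100

print(hit_rate(sequential), hit_rate(strided), hit_rate(repeated))  # 0.984375 0.0 0.99
```

Sequential access misses only once per 64-byte line, and repeated access misses only on first use, while the strided pattern gets no benefit from the cache at all.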

Cache vs Main Memory

DRAM serves as the computer’s main memory, holding the data retrieved from storage that the CPU performs calculations on. Both DRAM and cache memory are volatile memories that lose their contents when the power is turned off. DRAM is installed on the motherboard, and the CPU accesses it over a bus connection.

DRAM is considerably slower than L1, L2 or L3 cache memory, but it is also much less expensive. It provides faster data access than flash storage, hard disk drives (HDDs) and tape storage. Over the last few decades, DRAM has also come into use as a place to store frequently accessed disk data to improve I/O performance.

DRAM must be refreshed every few milliseconds. Cache memory, which is built from static RAM (SRAM), another type of random access memory, does not need to be refreshed. It is built right into the CPU to give the processor the fastest possible access to memory locations, providing nanosecond-level access times for frequently referenced instructions and data. SRAM is faster than DRAM, but because its chips are more complex, it’s also more expensive to build.

Cache vs Virtual Memory

The computer has a limited amount of RAM and even less cache memory. When a large program or many programs are running, the memory can be fully used. To compensate for the lack of physical memory, a computer operating system (OS) can create virtual memory.

To do this, the OS temporarily transfers inactive data from RAM to disk storage. This approach expands the virtual address space by combining active memory in RAM with inactive memory on the HDD to form contiguous addresses that hold applications and their data. Virtual memory allows a computer to run larger programs, or multiple programs simultaneously, with each program operating as if it had far more memory than is physically installed.

To map virtual memory onto physical memory, the OS divides memory into pages, fixed-size blocks that each contain a specific number of addresses. Pages that are not in use are kept on disk in a page file or swap file; when they are needed, the OS copies them from disk into main memory and translates virtual addresses into physical addresses.
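Copying pages in from disk only when they are touched is known as demand paging, and each such load is a page fault. The Python sketch below counts page faults for a sequence of page references, assuming a fixed number of physical frames and LRU replacement. LRU is one common textbook policy chosen here for illustration; real operating systems often use cheaper approximations of it, and the `count_page_faults` helper is hypothetical.

```python
from collections import OrderedDict


def count_page_faults(page_references, num_frames):
    """Simulate demand paging with LRU replacement and return the number of page faults."""
    frames = OrderedDict()   # pages resident in RAM, least recently used first
    faults = 0
    for page in page_references:
        if page in frames:
            frames.move_to_end(page)      # already in RAM: just update recency
            continue
        faults += 1                       # page fault: the page must be read from disk
        if len(frames) >= num_frames:
            frames.popitem(last=False)    # evict the LRU page back to the page/swap file
        frames[page] = True
    return faults


refs = [1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5]
for n in (3, 4):
    print(n, "frames:", count_page_faults(refs, n), "faults")
# 3 frames: 10 faults
# 4 frames: 8 faults
```

Giving the same workload one more physical frame reduces the number of slow disk reads, which mirrors the RAM-versus-disk trade-off described above.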

That covers what cache memory is and how it works. If you know more about cache memory, feel free to contact us by email. I hope this article has been useful to you. Thanks.

image source: freepik.com